What Is Ethical AI? Why Is It Important?

Ethical AI has become paramount as artificial intelligence (AI) increasingly permeates our daily lives and business operations. The technology holds immense potential, from AI-driven healthcare diagnostics to automated hiring processes, but it also raises ethical concerns. AI ethics, the practice of designing, developing, and deploying AI systems to prioritize fairness, transparency, and responsibility, is crucial in addressing these concerns.

In this blog post, we explore the concept of ethical AI, why it’s essential, and how businesses can adopt it responsibly.

Ethics and AI

AI technologies, while powerful, can sometimes operate in ways that conflict with ethical standards. These conflicts arise when AI systems inadvertently perpetuate bias, infringe on privacy, or make decisions that lack transparency.

For example, one study found that algorithmic bias in AI-driven treatment technologies has led to misdiagnoses and biased treatment decisions. In addition, 27% of the harm to people was caused by generative AI being implemented too quickly, before it had been tested to understand the risks to individuals and communities.

So, what makes AI ethical? Or, for that matter, what makes anything ethical? In practical terms, being ethical involves adhering to and respecting human values, rights, and moral standards. It also means acting in ways that are considered just and appropriate without causing harm. Ethics addresses the question of what is right or wrong rather than simply focusing on whether something is legal.

AI can be considered ethical when it is developed within an ethical framework whose objective goes beyond financial profit to improving society. Responsible AI is the practice of AI development guided by the principles of fairness, transparency, explainability, human-centered design, and safeguarding privacy and security.

AI Ethical Concerns

AI is being used in numerous ways to raise living standards, protect human rights, and boost productivity and efficiency by optimizing processes, gathering insights, and detecting fraud. However, AI also carries ethical risks, especially when it is misused. What potential risks should AI experts consider when developing ethical AI systems and solutions that aim to benefit society?

Labor Force

One of the most debated ethical concerns in AI is its impact on the labor force. AI’s ability to automate repetitive and data-driven tasks is seen as a double-edged sword.

On the one hand, automation enhances productivity and drives innovation in industries like manufacturing, healthcare, and finance. On the other, it poses the risk of displacing millions of jobs, particularly in sectors where routine tasks can easily be automated.

An IMF analysis estimates that almost 40% of jobs globally are exposed to AI. In advanced economies, this figure rises to about 60%, and roughly half of those exposed jobs could be negatively affected.

The question of AI ethics comes into play when considering how businesses and governments will manage this shift in the labor market. Should companies be responsible for retraining displaced workers, or should that burden fall to governments?

Ethical AI frameworks advocate for policies that balance innovation with social responsibility, ensuring that workers are not left behind as technology progresses. Additionally, discussions on the ethics of AI must address the potential for economic inequality, as high-paying, tech-related jobs may replace lower-skilled positions, potentially widening the wealth gap.

Discrimination

AI has the potential to either perpetuate or reduce discrimination, depending on how it is designed and deployed. Discrimination in AI often arises due to biased data sets or algorithmic decisions that reflect societal prejudices.

For instance, AI systems used to generate content on social media platforms have been found to perpetuate harm that disproportionately affects women, trans people, and gender-neutral individuals. Such misuse highlights the urgent need for stricter regulations and ethical guidelines to better safeguard individuals.

Ethical AI frameworks prioritize fairness and aim to eliminate bias in AI systems. This goal can be pursued by increasing the diversity of training data, applying bias detection tools, and ensuring transparency in decision-making.
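To make "bias detection tools" more concrete, here is a minimal sketch of one common check: comparing how often a model gives a positive outcome to each group, then taking the ratio of the lowest rate to the highest (the disparate impact ratio). The function names and the sample data are hypothetical, and the 0.8 threshold is only the "four-fifths" rule of thumb from US hiring guidance, not a universal standard.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the share of positive predictions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome at all
    return min(rates.values()) / highest

# Hypothetical hiring-model outputs: 1 = advance to interview, 0 = reject.
preds = [1, 1, 0, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

ratio = disparate_impact_ratio(preds, groups)
print(selection_rates(preds, groups))  # {'A': 0.6, 'B': 0.4}
print(round(ratio, 3))                 # 0.667
if ratio < 0.8:  # "four-fifths" rule of thumb
    print("Warning: possible adverse impact; review the model and its data.")
```

A single metric like this can flag a problem but cannot explain it; in practice teams usually combine several fairness metrics with a review of the training data.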

Additionally, in the field of AI ethics, experts emphasize the need for diverse teams of developers. These teams play a crucial role in identifying and mitigating potential biases during the development phase.

Regulatory bodies are also stepping in. The European Union’s AI Act includes provisions to minimize the risk of discrimination in high-risk AI systems.

Privacy

AI’s capacity to collect, process, and analyze massive amounts of data also raises critical concerns about privacy. In many AI applications, particularly in areas like education and healthcare, individuals’ personal information is collected without their explicit consent.

The rise of facial recognition technology, for example, has drawn significant pushback from privacy advocates. A recent report found that 57% of consumers worldwide agree that AI poses a serious threat to their privacy.

Ethical AI prioritizes privacy by advocating for transparency and user control over personal data. To achieve this, AI systems should adhere to principles of data minimization, collecting only the information necessary for their operation.
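Data minimization can be as simple as an explicit allow-list applied before data ever reaches a model. The sketch below assumes a hypothetical loan-eligibility model; the field names and the `minimize` helper are illustrative, not part of any particular system.

```python
# Hypothetical allow-list for a loan-eligibility model; in practice it would
# follow from a documented purpose and a data protection review.
ALLOWED_FIELDS = {"income", "existing_debt", "employment_years"}

def minimize(record: dict, allowed: set[str] = ALLOWED_FIELDS) -> dict:
    """Keep only the fields the model needs and drop everything else."""
    return {key: value for key, value in record.items() if key in allowed}

applicant = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "date_of_birth": "1990-04-02",
    "income": 52000,
    "existing_debt": 8000,
    "employment_years": 6,
}

print(minimize(applicant))
# {'income': 52000, 'existing_debt': 8000, 'employment_years': 6}
```

An allow-list (rather than a block-list of fields to remove) fails safe: a newly added field stays out of the model until someone deliberately approves it.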

Additionally, ethical AI software incorporates privacy-preserving technologies, which enable systems to analyze data without revealing sensitive personal information. A key issue in AI ethics is striking the right balance between leveraging data for innovation and protecting privacy rights.
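The paragraph above does not name a specific privacy-preserving technology. One widely used option is differential privacy, sketched here with the Laplace mechanism for a simple counting query. The patient records, the `private_count` helper, and the epsilon value are illustrative assumptions.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Epsilon-differentially private count via the Laplace mechanism.

    A counting query has sensitivity 1: adding or removing one person changes
    the true count by at most 1, so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical patient records; only the noisy aggregate is released.
patients = [{"age": a} for a in (34, 71, 68, 45, 80, 52, 66)]
rng = random.Random(42)
noisy = private_count(patients, lambda p: p["age"] >= 65, epsilon=0.5, rng=rng)
print(f"Noisy count of patients aged 65+: {noisy:.1f}")  # true count is 4
```

Smaller values of epsilon add more noise and give stronger privacy; larger values give more accurate answers. Choosing epsilon is exactly the balance between innovation and privacy rights described above.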

Privacy is a key concern that must be considered in ethical AI development.

Accountability and Reliability

Another important aspect of AI ethics is ensuring accountability and reliability in AI systems. When AI makes decisions, such as approving a loan, diagnosing a medical condition, or determining someone’s eligibility for parole, questions about accountability arise.

These questions focus on who is responsible for any errors or biases in the AI’s decisions. This is a significant issue, particularly when the AI operates as a “black box,” meaning its decision-making process is not transparent or easily explainable.

Ethical AI emphasizes the need for AI systems to be explainable and accountable. This means that developers must build systems so that their decisions can be traced and justified. Furthermore, there should be clear lines of accountability so that when an AI system makes a mistake, the responsible parties can be identified and held accountable.
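One way to make decisions traceable is to record, for every decision, the inputs, the model version, and how much each input contributed to the outcome. The sketch below uses a toy linear scoring model with hand-set weights; the weights, threshold, `MODEL_VERSION` string, and `decide` function are all hypothetical and chosen only to show the shape of an auditable decision record.

```python
import json
from datetime import datetime, timezone

# Hypothetical, hand-set weights for a toy loan-scoring model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.5, "late_payments": -0.6}
BIAS = -0.5
THRESHOLD = 0.0
MODEL_VERSION = "toy-loan-model-0.1"  # hypothetical identifier

def decide(applicant_id: str, features: dict) -> dict:
    """Score an application and return an auditable decision record."""
    # For a linear model, each feature's contribution is weight * value.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return {
        "applicant_id": applicant_id,
        "model_version": MODEL_VERSION,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "inputs": features,
        "contributions": contributions,
        "score": score,
        "decision": "approve" if score >= THRESHOLD else "refer_to_human",
    }

record = decide("app-001", {"income_k": 60, "debt_ratio": 0.35, "late_payments": 1})
print(json.dumps(record, indent=2))
```

Sending below-threshold cases to a person rather than rejecting them outright is one design choice that keeps a clearly identifiable human accountable for adverse outcomes. More complex models need dedicated explanation methods, since their contributions are not simply weight times value.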

Reliable AI systems also undergo thorough testing to ensure they perform as expected and minimize the risk of harm.

Authenticity and Integrity

As AI systems become more capable of generating realistic content, concerns about authenticity and integrity have grown. AI technologies like deepfakes, which use machine learning to create convincing fake images or videos, pose significant ethical challenges. These technologies can spread misinformation or damage reputations, raising questions about how to detect and regulate AI-generated content.

The issue of authenticity also extends to AI’s role in journalism and content creation. AI-generated news articles and social media posts may raise questions about credibility and trustworthiness. AI ethics stresses the importance of transparency, ensuring that users know when they are interacting with AI-generated content.

Additionally, there is a growing need for AI systems that can detect and flag manipulated or false content, contributing to the fight against misinformation.

Sympathy and Empathy

While AI can simulate human-like interactions, it fundamentally lacks the capacity for genuine sympathy and empathy. This raises ethical concerns, particularly when AI is used in sensitive settings such as healthcare or customer service, where emotional intelligence is crucial. For instance, while AI chatbots or voicebots can assist users with routine inquiries, they may struggle to respond appropriately to emotional situations.

The challenge for ethical AI is ensuring that artificial intelligence systems are designed with an understanding of their limitations. Developers must be cautious about deploying AI in situations requiring emotional sensitivity. Moreover, ethical AI frameworks emphasize the importance of human oversight in scenarios where empathy is critical.

AI lacks the emotional intelligence that human agents bring.

Technological Singularity

Finally, the idea of technological singularity, a point where AI surpasses human intelligence, presents one of the most profound ethical dilemmas in the field of AI ethics. While this scenario remains speculative, some experts believe it could occur within the next few decades. If AI systems achieve superintelligence, they could develop uncontrollable goals and behaviors, raising concerns about ensuring their ethical operation.

The ethics of AI must address the potential risks of technological singularity. This includes creating safeguards to prevent AI systems from becoming uncontrollable or harmful.

Ethical AI research aims to keep artificial intelligence systems aligned with human values as they grow more autonomous and intelligent. International organizations, governments, and private entities are developing regulations and protocols to ensure the safe and ethical advancement of AI.

What Is Ethical AI Software?

One of the earliest recorded instances of ethics in technology dates back to the late 16th century. At that time, a chamberlain presented Queen Elizabeth I with a knitting machine designed to replace human knitters, as stockings were highly popular. The Queen rejected the invention, fearing it would displace hardworking knitters and leave them destitute. She reportedly said it would bring them “ruin by depriving them of employment and thus making them beggars.”

One of the main arguments put forth by anti-tech activists is the fear of being replaced by machines, among other concerns. As AI evolves and learns to mimic human thought and behavior, this debate intensifies, along with anxieties about machines surpassing or overtaking humanity.

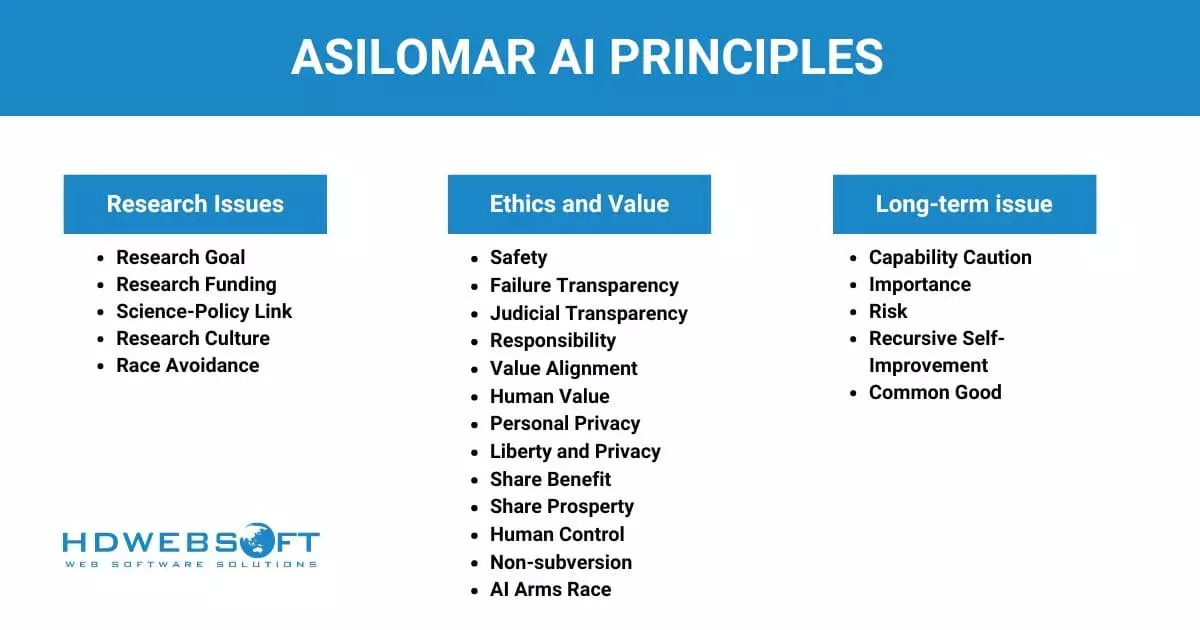

This is why tech enthusiasts believe that, in addition to being legal, AI software must also be moral. So, what defines ethical AI? One of the most recognized frameworks for ethical AI is the Asilomar AI Principles. They were signed by many tech leaders and other influential figures, such as Elon Musk, Stephen Hawking, OpenAI co-founder,…

Let’s take a look at these principles:

Why Must Businesses Practice Ethical AI?

Businesses must practice ethical AI for several crucial reasons that extend beyond mere compliance and legal frameworks. Here’s why it is essential for businesses:

Building Trust and Reputation

One of the foremost reasons businesses should prioritize ethics in AI is to maintain and build trust with their customers. AI systems that are perceived as biased, opaque, or invasive can significantly harm a company’s reputation.

On the other hand, businesses that commit to transparent and fair AI practices are more likely to earn the trust of their users and stakeholders.

In sectors like healthcare, finance, and customer service, trust is essential. Adopting AI ethics can help prevent situations where users feel exploited or unfairly treated by algorithms.

Ethical AI helps businesses build trust with customers for a better reputation.

Reducing Legal and Regulatory Risks

The landscape of AI regulation is rapidly evolving, and non-compliance with ethical standards can expose businesses to legal risks. Governments around the world are working on AI regulations that will hold companies accountable for discriminatory outcomes or privacy violations caused by AI systems.

By adopting ethical AI principles, businesses can reduce the risk of fines, legal action, or reputational damage from misused AI. Companies that fail to act ethically may face harsher scrutiny as laws surrounding AI, such as the European Union’s AI Act, continue to strengthen.

Fostering Innovation and Inclusivity

Practicing ethical AI helps ensure that its benefits are distributed equitably across all user groups. By reducing bias and designing inclusive systems, businesses can create more innovative and impactful products. As a result, AI that accounts for diverse user needs can lead to better customer satisfaction and wider market penetration.

Moreover, an inclusive approach to AI development fosters creativity and innovation. It pushes developers to think beyond the limitations of traditional, biased data sets.

Enhancing Decision-Making

AI systems are increasingly used to support decision-making in areas such as hiring, customer service, and healthcare. Practicing ethical AI helps ensure that these decisions are made fairly and transparently, improving outcomes for users. Ethical AI systems help businesses make more informed, data-driven decisions while ensuring they do not inadvertently harm individuals or groups.

Furthermore, businesses can foster a culture of accountability and transparency by ensuring that AI-driven decisions can be explained and justified.

Mitigating Bias and Discrimination

One of the core concerns in the ethics of AI is algorithmic bias. Biased AI systems can reinforce and perpetuate existing inequalities, leading to negative outcomes for marginalized communities. By focusing on ethical AI practices, businesses can actively work to reduce bias in their algorithms, ensuring fairness in decisions related to hiring, lending, and criminal justice.

This commitment to fairness protects businesses from public backlash and aligns them with broader social goals of equality and justice.

Protecting Customer Privacy

Privacy is a key issue in AI ethics, especially as AI systems collect and process vast amounts of personal data. Businesses that prioritize ethical AI ensure that users’ privacy is respected and that data is handled responsibly. In light of rising concerns over data breaches and misuse, customers are becoming increasingly cautious about how their data is handled.

Adopting privacy-focused AI practices is a way for businesses to strengthen customer loyalty and safeguard against potential legal or reputational risks associated with privacy violations.

Strong ethical AI practices are needed to protect customers’ personal privacy.

Future-Proofing the Business

The ethical use of AI is not just a trend. It is likely to become a core requirement for businesses as technology and regulations evolve. Companies that embrace ethical AI are better positioned to adapt to future changes in both public sentiment and regulatory landscapes.

Ethical AI practices prepare businesses for long-term sustainability, not just regulatory compliance. They also help maintain positive relationships with consumers, employees, and society at large.

Future Prospects for AI Ethics

The future of AI ethics holds promising developments as advancements in artificial intelligence continue to accelerate.

A primary focus will be creating robust regulatory frameworks to guide the development and deployment of AI systems. Governments and international organizations are developing guidelines to ensure AI fairness, transparency, and accountability. This effort is evident in initiatives like the European Union’s AI Act and the Partnership on AI. These initiatives aim to prevent misuse, particularly in high-risk areas such as healthcare, law enforcement, and financial technology.

Another key prospect is the evolution of ethical AI software capable of detecting and mitigating bias in real time. Developers are increasingly building ethical principles directly into AI systems to help ensure that algorithms do not perpetuate discrimination or bias. Named entity recognition (NER) models can also play a crucial role by extracting and classifying sensitive information in text, which helps developers identify and address potential biases in data and model training.
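The sketch below illustrates the idea of flagging sensitive information before data is used for training. Note that it swaps in simple regular expressions as a stand-in for a trained NER model: the patterns, labels, and helper names are hypothetical, and a real NER model would also catch names, locations, and other entities that these rules miss. The gendered-term pattern reflects one common bias-mitigation step, masking gender signals in text such as résumés before screening.

```python
import re

# Hypothetical rule-based patterns standing in for a trained NER model.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "GENDER_TERM": re.compile(
        r"\b(?:he|she|him|her|his|hers|male|female)\b", re.IGNORECASE
    ),
}

def find_sensitive(text: str) -> list[tuple[str, str]]:
    """Return (label, matched_text) pairs in order of appearance."""
    hits = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((match.start(), label, match.group()))
    return [(label, value) for _, label, value in sorted(hits)]

def redact(text: str) -> str:
    """Replace each detected span with its label, e.g. [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

sample = "She can be reached at jane.doe@example.com or 555-123-4567."
print(find_sensitive(sample))
print(redact(sample))
# [GENDER_TERM] can be reached at [EMAIL] or [PHONE].
```

Rules like these are easy to audit but brittle; the trade-off is usually to start with rules for well-structured data (emails, phone numbers) and rely on a trained model for open-ended entities.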

Furthermore, ethical AI will help increase the transparency of AI decision-making processes, making it easier for individuals to understand how algorithms work. It will also provide avenues for redress if decisions negatively impact them.

Last but not least, ethics in AI will become even more critical as AI systems grow in autonomy and sophistication. Technologies like self-driving cars and AI-generated content pose new ethical challenges, which require advanced AI governance models that balance innovation with responsibility.

The future of ethical artificial intelligence is about more than preventing harm. It’s about guiding the development of AI to benefit all of society, ensuring inclusive, fair, and transparent systems that align with human values.

Conclusion

Ethical AI is no longer a niche concern; it’s a critical aspect of responsible technology development and deployment. As AI becomes more pervasive, ensuring that it operates fairly and responsibly is essential for protecting users and maintaining trust in the technology. Businesses, governments, and developers all have a role to play in building and implementing ethical AI systems that benefit society. The future of AI must be both innovative and ethical for it to be truly transformative.

Ethical AI forms the foundation for a more just and equitable technological future. It ensures that the power of AI can be harnessed without compromising human rights or values.